Hello! I am an ML/CV Engineer. I completed my MSc in AI at Korea University (2023-25), advised by Dr. Seong-Whan Lee.

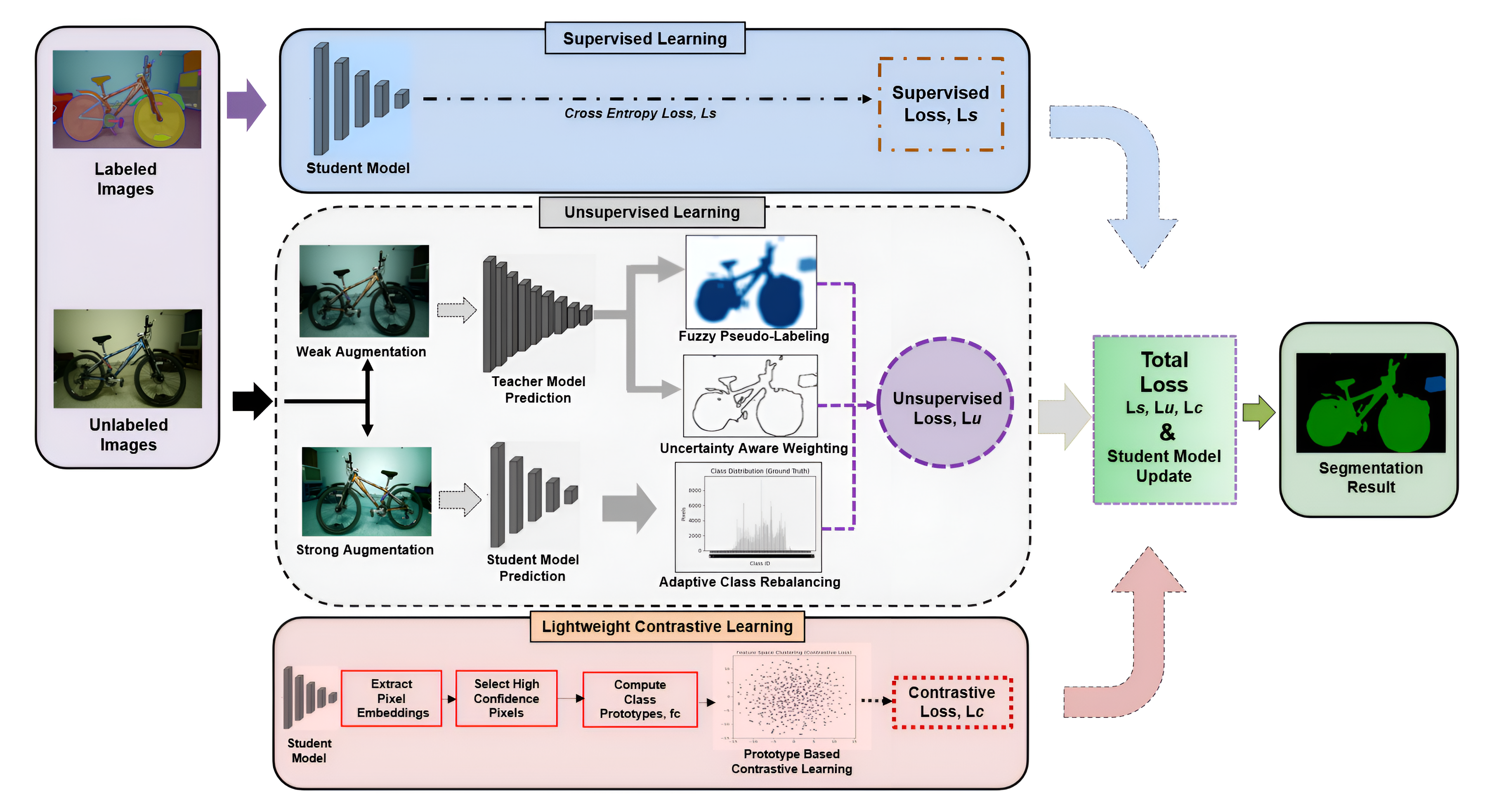

I have 4+ years of experience in computer vision, deep learning, robotics and software engineering. My most recent work has been deploying image & video segmentation models.

Aside from semantic segmentation, I also work on depth estimation, instance retrieval, dense matching, and sparse matching. I also have research experience in big data and reinforcement learning.

If you want to discuss anything, please feel free to reach out :)

Email / CV / Google Scholar / LinkedIn / GitHub